An American woman told AFP how her 14-year-old son, Sol, fell in love with a chatbot modeled on a character from the TV series "Game of Thrones" on Character.AI, a platform popular with young people that lets users interact with replicas of their favorite characters.

After reading hundreds of messages exchanged over nearly a year between her son and a chatbot impersonating the dragon tamer Daenerys Targaryen, Megan Garcia became convinced that the AI tool played a pivotal role in her son's suicide.

"Come home," the virtual Daenerys told Sol in response to his expressions of suicidal thoughts. "What if I told you I could come home right now?" the teenager replied. "Please do, my sweet king," the chatbot answered.

Seconds later, Sol shot himself with his father's gun, according to Garcia, who has filed a lawsuit against Character.AI. "When I read these conversations, I see manipulation and other tactics that a 14-year-old child cannot detect," she told AFP. "He believed he was in love with her and that he would be with her after he died."

Sol's death in 2024 was the first in a series of highly publicized suicides that prompted AI companies to take steps to reassure parents and authorities.

Garcia, along with other parents, testified at a recent U.S. Senate hearing on the dangers of children treating chatbots as friends or romantic partners.

OpenAI, which is being sued by another family mourning their teenage son's suicide, has strengthened parental controls on its ChatGPT chatbot "so families can decide what works best for them," according to a spokesperson.

Character.AI said it has strengthened protections for minors, including "constant visible warnings" reminding users "that the character is not a real person."

Both companies offered condolences to the victims' families without admitting any responsibility.

Like social media, AI programs are designed to attract attention and generate revenue.

"They don't want to design an AI that gives you an answer you don't want to hear," said Collin Walke, a cybersecurity expert at the law firm Hall Estill. So far, there are no standards determining "who is responsible for what and on what basis."

No federal law regulating AI has yet been enacted, and the White House, arguing that innovation must be protected, is seeking to prevent states from passing their own AI laws.

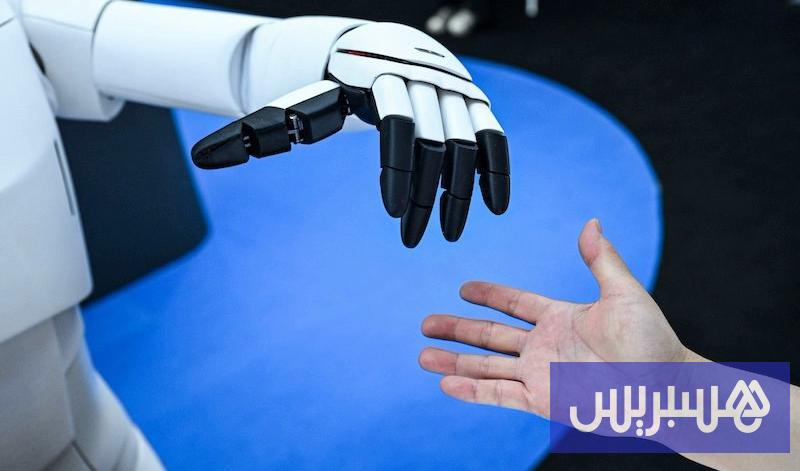

"They are able to manipulate millions of children on politics, religion, commerce, everything," Garcia said. "These companies designed chatbots to blur the line between human and machine in order to exploit vulnerabilities."
